Data Normalization in IT Asset Management: Why It Matters

Data Normalization helps organize and standardize your IT data. Understand 1NF to BCNF, why it matters, and how it simplifies asset tracking and reporting.

Many IT teams deal with asset records that are inconsistent or messy. A single device might appear in the system as “Dell Laptop,” “DELL,” or “Dell Inc.” These small differences add up, creating confusion, duplicate entries, and unreliable reports. Over time, this makes it harder to keep track of what the organization actually owns or uses.

In IT Asset Management, consistent data is key. Clear, standardized records help teams understand the full picture of their hardware and software, reduce errors, and improve decision-making.

Data normalization is the process that brings structure to this chaos. It ensures that information follows the same format across all records, making asset data easier to manage and use.

What Is Data Normalization?

Data normalization is the process of cleaning and organizing information so that data is stored in a consistent structure, reducing errors and inconsistencies. It focuses on removing duplicates, fixing inconsistent entries, and making sure all values are stored in a standard format.

For example, a company name might appear as “HP,” “H.P.,” or “Hewlett-Packard” in different parts of a dataset. These variations can create update anomalies that can be easily avoided with normalization. Even though they all refer to the same thing, they can be treated as separate entries. Normalization brings these variations together into one consistent value, such as “HP.”

This process makes data easier to search, sort, and analyze. It also helps reduce errors and improve the quality of reports. Normalization is especially helpful when information comes from many sources or is entered by different people over time.

By creating clear, consistent data, teams can work more efficiently and make better decisions based on accurate information.

Who Needs Data Normalization?

Any team or organization that works with large amounts of data can benefit from normalization. When information comes from different sources or is handled by multiple people, it often ends up messy and inconsistent. Normalization helps clean it up and keep it that way.

Teams that rely on accurate records to make decisions, generate reports, or manage daily operations are especially in need of consistent data. This includes anyone working with inventory, vendors, software, user details, or financial records.

Some common situations where normalization is needed:

- Combining data from spreadsheets, databases, or third-party tools

- Auditing records that have duplicates or missing fields

- Importing old data into a new system

- Managing vendor or product names that are entered in different formats

Without standardization, small errors can lead to bigger issues like incorrect totals, update anomalies, duplicate entries, or failed reports due to data integrity issues. Normalization helps prevent those problems and creates a more reliable foundation for any system that relies on data.

Read also: What is Denormalization in Databases?

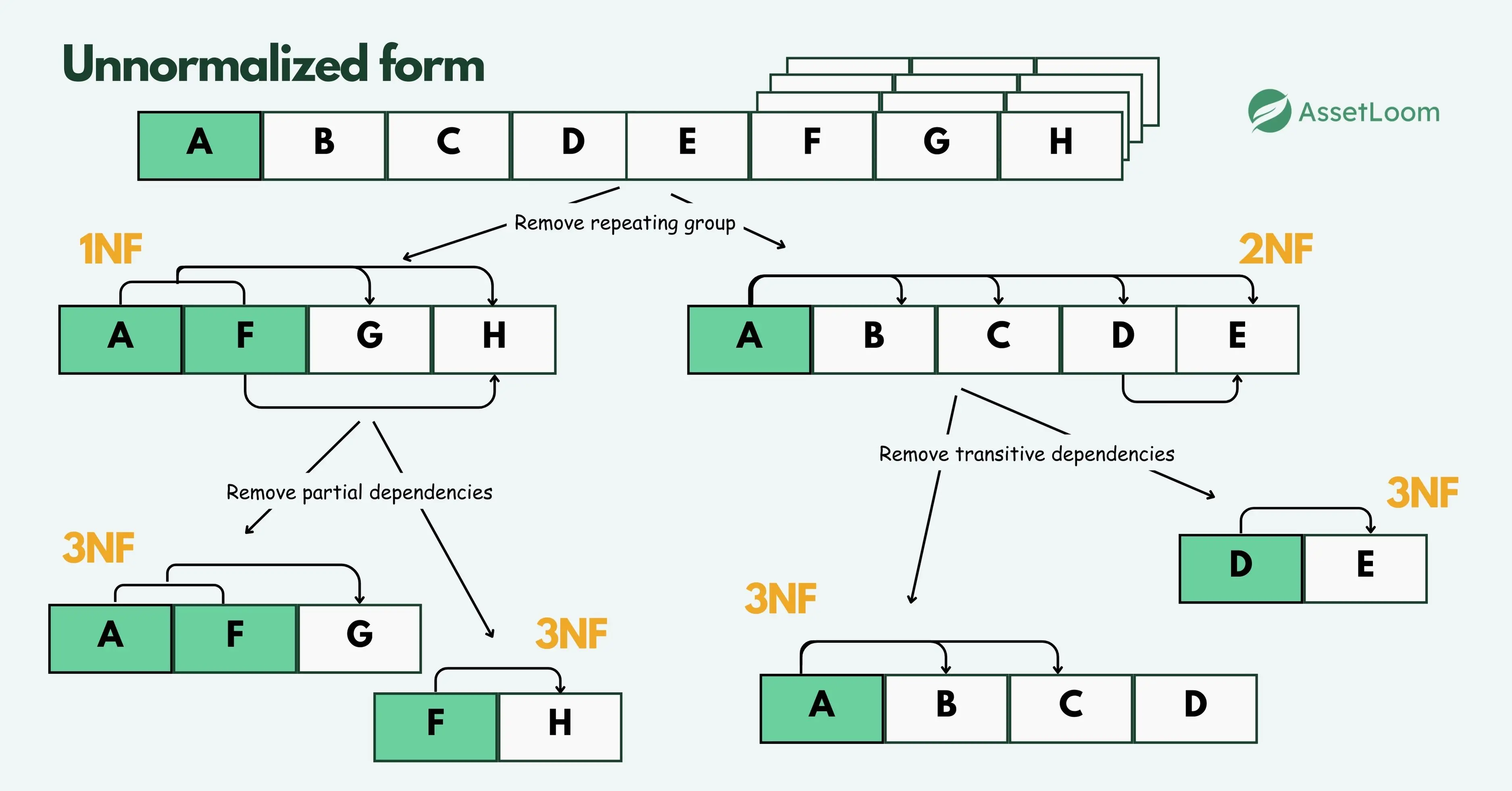

4 Types Of Data Normalization In Databases

In database design, normalization helps structure data so that it’s efficient, consistent, and free from unnecessary duplication, which is critical for maintaining data integrity. This is done by organizing the data into different "normal forms." Each form builds on the previous one and solves a specific type of problem.

Here are the four main types:

1. First Normal Form (1NF)

First Normal Form, or 1NF, is the first and most basic step in organizing a database. It lays the groundwork for clean, reliable data by setting a few important rules that help avoid confusion and reduce errors.

A table is considered to be in 1NF when it meets the following conditions, ensuring that each record has its own unique identifier and reduces redundancy:

- Each field contains only atomic values: This means every column holds just one value per row. No lists, arrays, or combined items in a single field.

- Every record is unique and has a primary key: There must be a way to uniquely identify each row in the table, usually with a primary key like an ID number.

- No repeating groups or multiple columns for similar data: Each piece of data should live in its own row and column, not be repeated or grouped together in a single row.

These rules help eliminate redundancy, simplify queries, and improve data consistency across the system.

Example of a 1NF Violation

Let’s say you’re working with an e-commerce order table. When customers buy multiple items in a single order, it might look like this:

| Order_ID | Customer_Name | Products Ordered |

|---|---|---|

| 1001 | John Doe | Laptop, Mouse |

| 1002 | Jane Smith | Phone, Headphones |

At first glance, this may seem fine, but the 'Products Ordered' column contains multiple values. This structure breaks 1NF because the data is not atomic. It’s harder to search, filter, or analyze individual products when they’re stored together like this.

How to Fix It (Applying 1NF)

To follow 1NF, the table should be restructured so that each field contains just one value:

| Order_ID | Customer_Name | Product |

|---|---|---|

| 1001 | John Doe | Laptop |

| 1001 | John Doe | Mouse |

| 1002 | Jane Smith | Phone |

| 1002 | Jane Smith | Headphones |

Now, each row contains a single product per order, making the data cleaner and much easier to work with. It becomes simple to run reports like “How many phones were sold?” or “Which customers ordered a mouse?”

2. Second Normal Form (2NF)

Second Normal Form, or 2NF, builds on the structure created by 1NF. Once a table contains only atomic values and has a clear primary key, the next step is to eliminate partial dependencies..

A table is in 2NF when:

- It is already in 1NF

- All non-key columns depend on the entire primary key, not just part of it

This rule is especially important when a table uses a composite primary key (a key made from more than one column).

Why it matters

When only part of a key determines a value, it creates redundancy and makes updates more difficult. 2NF removes these partial dependencies and separates data into related tables for better structure.

Example of a 2NF Violation

Imagine a course enrollment table where students can enroll in multiple courses, and each course has an instructor.

| Student_ID | Course_ID | Student_Name | Course_Name | Instructor |

|---|---|---|---|---|

| S001 | C101 | Alice | Math | Mr. Taylor |

| S001 | C102 | Alice | Physics | Ms. Lee |

| S002 | C101 | Bob | Math | Mr. Taylor |

The composite key here is (Student_ID, Course_ID). However:

- Student_Name depends only on Student_ID

- Course_Name and Instructor depend only on Course_ID

These are partial dependencies, meaning the table violates 2NF.

How to Fix It (Applying 2NF)

We separate the data into three tables to remove the partial dependencies:

Students Table

| Student_ID | Student_Name |

|---|---|

| S001 | Alice |

| S002 | Bob |

Courses Table

| Course_ID | Course_Name | Instructor |

|---|---|---|

| C101 | Math | Mr. Taylor |

| C102 | Physics | Ms. Lee |

Enrollments Table

| Student_ID | Course_ID |

|---|---|

| S001 | C101 |

| S001 | C102 |

| S002 | C101 |

Now, each table stores data that depends only on a single key. This structure avoids duplication and makes updates easier. For example, if an instructor’s name changes, it only needs to be updated in one place.

3. Third Normal Form (3NF)

After a table meets the rules of 1NF and 2NF, the next step is Third Normal Form (3NF). This level focuses on removing transitive dependencies—when a non-key column depends on another non-key column instead of depending directly on the primary key.

A table is in 3NF when:

- It is already in 2NF

- All non-key columns depend only on the primary key and not on other non-key columns

Why it matters

When values rely on other non-key data, any updates or changes can cause inconsistencies. 3NF simplifies maintenance and ensures that each piece of information lives in only one place.

Example of a 3NF Violation

Let’s look at a simplified employee table:

| Employee_ID | Employee_Name | Department_ID | Department_Name |

|---|---|---|---|

| E001 | Alice | D01 | Finance |

| E002 | Bob | D02 | Marketing |

| E003 | Charlie | D01 | Finance |

The primary key here is Employee_ID. But notice:

- Department_Name depends on Department_ID, not directly on Employee_ID.

That’s a transitive dependency, which violates 3NF.

How to Fix It (Applying 3NF)

To follow 3NF, we split the data into two tables:

Employees Table

| Employee_ID | Employee_Name | Department_ID |

|---|---|---|

| E001 | Alice | D01 |

| E002 | Bob | D02 |

| E003 | Charlie | D01 |

Departments Table

| Department_ID | Department_Name |

|---|---|

| D01 | Finance |

| D02 | Marketing |

Now, each piece of information depends only on its direct key. If the department name ever changes, it only needs to be updated once in the Departments table.

4. Boyce-Codd Normal Form (BCNF)

Boyce-Codd Normal Form (BCNF) is a more advanced version of Third Normal Form. It handles certain edge cases where a table meets 3NF but still allows some redundancy due to overlapping candidate keys.

A table is in BCNF when:

- It is already in 3NF

- Every determinant is a candidate key

A determinant is a column (or set of columns) that can uniquely determine another column. A candidate key is a minimal set of columns that can uniquely identify a row.

Why it matters

Even in a 3NF-compliant table, dependencies can exist that lead to inconsistencies or duplicated data. BCNF ensures that these cases are addressed by tightening the rules even further.

Example of a BCNF Violation

Let’s say there’s a table that tracks which instructor teaches which course in which room:

| Course | Instructor | Room |

|---|---|---|

| Math | Smith | R101 |

| Physics | Lee | R102 |

| Math | Smith | R101 |

Assume:

- Each course is taught by only one instructor

- Each room is assigned to only one instructor

Here, both Course → Instructor and Room → Instructor are valid dependencies. But neither Course nor Room alone may be the primary key—perhaps the actual primary key is (Course, Room).

This means:

- There’s a dependency (Room → Instructor) where the determinant (Room) is not a candidate key. That violates BCNF.

How to Fix It (Applying BCNF)

To resolve the issue, we split the table based on the functional dependencies.

Courses Table

| Course | Instructor |

|---|---|

| Math | Smith |

| Physics | Lee |

Rooms Table

| Room | Instructor |

|---|---|

| R101 | Smith |

| R102 | Lee |

Now, each determinant is a candidate key in its table. Redundancy is reduced, and the structure is clearer and more maintainable.

3 Data Normalization Techniques & Formulas

While database normalization focuses on the structure of tables and relationships, data normalization techniques often refer to adjusting values into a standard range or format, especially useful in reporting, analytics, or data science.

These techniques help scale numeric data so it's easier to compare and work with. Here are three common methods:

1. Min-Max Normalization

Min-Max Normalization is a technique used to scale numeric values into a fixed range, typically between 0 and 1. This helps bring all values into the same scale, making it easier to compare them or use them in models or reports.

Why it’s useful

Raw data can have wide value ranges. For example, asset costs might range from $500 to $50,000. Comparing these directly can be difficult and misleading. By normalizing the data, everything is placed on the same scale and becomes easier to understand and work with.

The Formula

Normalized Value = (X - Min) / (Max - Min)

Where:

- X is the original value

- Min is the minimum value in the dataset

- Max is the maximum value in the dataset

Example

Imagine you have the following asset costs:

- Min = $1,000

- Max = $5,000

- Original Value (X) = $3,000

Apply the formula:

(3000 - 1000) / (5000 - 1000) = 2000 / 4000 = 0.5

So, $3,000 is normalized to 0.5 on the scale from 0 to 1.

Use Cases

- Comparing hardware costs across departments

- Visualizing performance metrics on dashboards

- Feeding data into algorithms or scoring systems

Important Notes

- Min-Max keeps the shape of the original distribution

- It’s sensitive to outliers. One unusually high or low value can affect the scale

- Often used when the range is known and stable

2. Z-Score Normalization (Standardization)

Z-score normalization, also known as standardization, transforms data based on how far each value is from the mean. Instead of fitting values between 0 and 1 like Min-Max, it measures how many standard deviations a value is above or below the average.

Why it’s useful

This method is helpful when you want to understand how extreme or typical a value is compared to the rest of the dataset. It’s especially useful when data has different scales or needs to be compared across groups.

The Formula

Z = (X - Mean) / Standard Deviation

Where:

- X is the original value

- Mean is the average of the dataset

- Standard Deviation measures how spread out the values are

Example

Suppose you’re analyzing the number of support tickets resolved per technician.

- Mean = 40 tickets

- Standard Deviation = 5

- Technician A resolved 50 tickets

Apply the formula:

Z = (50 - 40) / 5 = 10 / 5 = 2

So, Technician A’s result has a Z-score of 2, meaning their performance is 2 standard deviations above the average.

Use Cases

- Identifying outliers (unusually high or low values)

- Comparing values from different units or categories

- Preparing data for machine learning models that expect standardized input

Important Notes

- The result can be negative (below average), zero (equal to average), or positive (above average)

- Works well for datasets that follow a normal distribution

- Unlike Min-Max, Z-scores are not limited to a specific range

3. Decimal Scaling

Decimal Scaling is a straightforward way to normalize data by moving the decimal point. This is done by dividing each value by a power of 10, depending on how large the biggest number in the dataset is.

Why it’s useful

This method quickly reduces large numbers to smaller, easier-to-handle values. It's often used when you don’t need precise scaling like Min-Max or Z-score, but just want the numbers to be within a similar range.

The Formula

Normalized Value = X / 10^j

Where:

- X is the original value

- j is the smallest integer such that all resulting values are less than 1

Example

Let’s say you have asset serial numbers:

- 12345

- 67890

- 98765

The largest number is 98765. We want all values to be less than 1, so:

j = 5 (because 10^5 = 100000)

Now divide each value by 100000:

- 12345 / 100000 = 0.12345

- 67890 / 100000 = 0.67890

- 98765 / 100000 = 0.98765

All values are now normalized and less than 1.

Use Cases

- Scaling ID numbers or transaction codes for charts or calculations

- Preparing data for lightweight processing tasks

- When exact value ranges aren’t critical, just consistency

Important Notes

- Simple and fast to apply

- Less precise than other methods

- Best used when the range of values is known and not too wide

The Role of Data Normalization in IT Asset Management

Managing IT assets involves collecting and storing data about hardware, software, licenses, users, departments, vendors, and more, ensuring consistency across systems. This data often comes from many sources, such as manual entry, procurement systems, support tickets, or imports from spreadsheets. When each source uses slightly different formats, the result is inconsistent and messy data.

That’s where data normalization plays a key role.

Why normalization matters

In IT Asset Management, even small inconsistencies can lead to bigger problems. For example:

- The same manufacturer might be listed as “Dell,” “DELL Inc.,” or “Dell Computers.”

- Locations might be recorded as “NY,” “New York,” or “N.Y.”

- One asset could accidentally appear twice with slightly different names or codes.

These differences may seem minor, but they can cause insert, update, and delete anomalies that disrupt reporting, asset tracking, and data accuracy. Searching for “Dell” assets may leave out devices listed under a different variation. Reports may show inaccurate counts. Audits take longer, and decisions are based on unreliable data.

How normalization helps

Normalization helps by:

- Standardizing asset names, locations, and vendors so they’re consistent across records

- Reducing redundancy and duplicates that result from small variations in data entry, enhancing the overall data integrity

- Improving search and filtering by using predictable formats

- Making reports accurate and meaningful

- Supporting automation by using clean, structured data that systems can trust

Example scenario

An organization tracking 5,000 devices may have over 20 different ways of listing the same vendor. Once the data is normalized, it becomes easier to:

- Group assets by vendor or department

- Identify underused or missing devices

- Prepare clean, audit-ready reports in less time

Data normalization makes IT asset data easier to manage, more reliable to work with, and stronger for long-term planning.

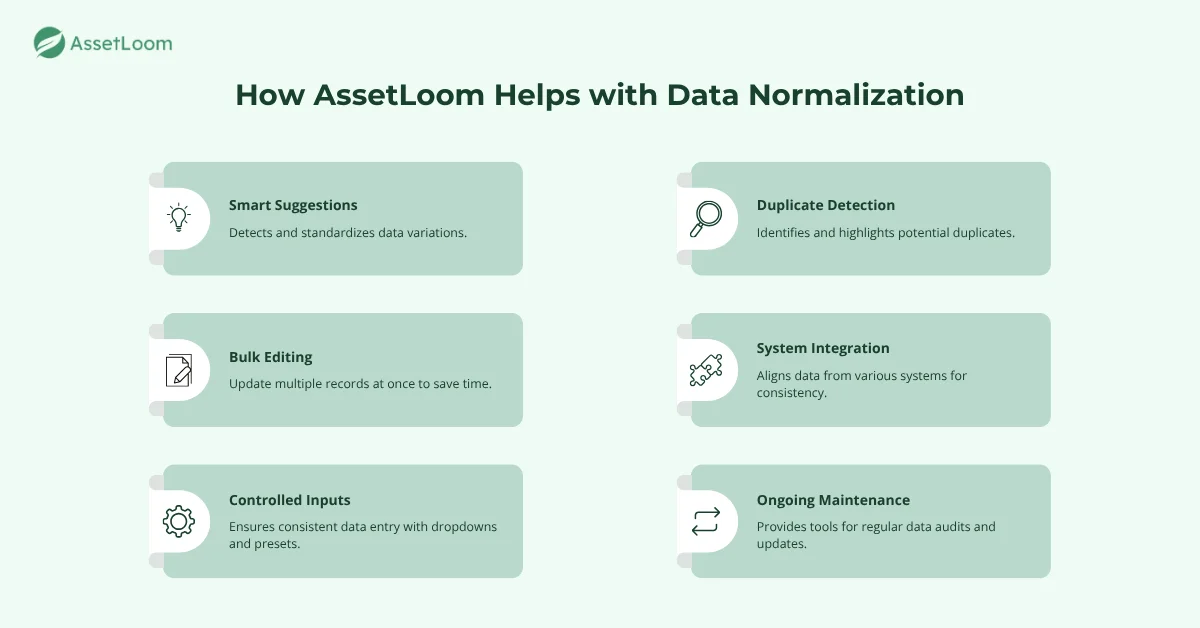

How AssetLoom Can Help With Data Normalization

Managing IT assets data manually can be time-consuming, especially when you're dealing with thousands of records across different systems and teams, each handling insert, update, and delete operations. AssetLoom offers built-in features to make data normalization faster, easier, and more reliable.

Here’s how it helps:

1. Smart Suggestions for Standardizing Fields

When new data is added, AssetLoom can detect variations and recommend standard values. For example, if someone enters “Hewlett Packard” but “HP” is the preferred label, the system can flag or auto-correct the entry.

2. Bulk Editing Tools

AssetLoom lets you update multiple records at once. Whether you're correcting manufacturer names, fixing location tags, or merging duplicates, batch actions save time and reduce errors.

3. Controlled Inputs and Dropdowns

To prevent inconsistent data entry, AssetLoom allows you to use dropdown menus and preset values for fields like department, asset type, or vendor. This ensures everyone is using the same format from the start.

4. Duplicate Detection

The platform can identify and highlight potential duplicate assets, even when entries aren't exact matches. This helps clean up the data and avoid confusion during reporting or audits.

5. Integration with Existing Systems

Data pulled from procurement, HR, or support systems often comes in different formats. AssetLoom helps align this incoming data with your existing structure so that it’s consistent across the board.

6. Ongoing Maintenance Support

As your asset database grows, so does the risk of inconsistency. AssetLoom provides tools to regularly audit and validate data, keeping things clean and up to date over time.

By using these features, teams spend less time fixing errors and more time using reliable data that maintains data integrity to make informed decisions.

Frequently Asked Questions

1. Why is data normalization important for IT asset management?

Data normalization ensures consistent, accurate, and reliable asset records. It helps eliminate duplicates, reduces errors, and improves the overall quality of your reports, making it easier to track and manage assets across the organization.

2. How does data normalization impact database performance?

Normalization improves database performance by reducing redundancy and ensuring that data is structured efficiently. It helps optimize queries, making data retrieval faster and more reliable, which is crucial when managing large volumes of asset data.

3. What are some common challenges when implementing data normalization?

Common challenges include handling data inconsistencies from multiple sources, deciding which normalization method to apply, and ensuring all team members follow the same format. Using automated tools like AssetLoom can help streamline the process and reduce manual errors.

Related Blogs

Subscribe for Expert Tips and Updates

Receive the latest news from AssetLoom, right in your inbox.